
Dilated Causal Convolution from WaveNet

Data/Machine learning, 2021. 3. 1. 13:20

Concept

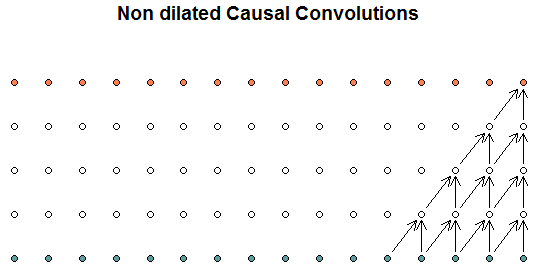

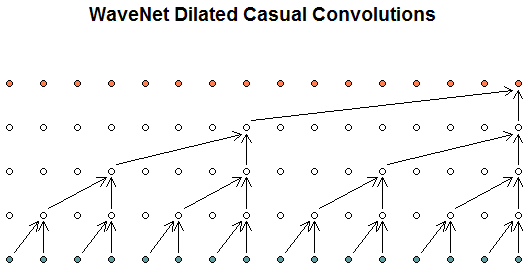

The dilated causal convolution (DCC) was introduced in the paper on WaveNet, a model developed by DeepMind (a Google subsidiary) to generate realistic-sounding speech from text. You can try WaveNet text-to-speech here. A comparison of stacked causal convolutions with and without dilation is shown in the figures above.

With the same number of layers, the DCC covers a much longer stretch of the time series, which allows the model to capture long-range (global) effects. Some studies on benchmark sequence data showed that the memory of this simple 1D convolutional network can in many cases even exceed that of the more complicated LSTM or GRU networks.
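To make the "longer time series" point concrete, here is a minimal pure-Python sketch. It is not the code from the referenced notebook. The function names (`dilated_causal_conv1d`, `stack_layers`, `receptive_field`), the kernel size of 2, and the dilation rates 1, 2, 4, 8 are assumptions chosen for illustration; the kernel size and rates follow the illustration in the WaveNet paper. Each layer computes `y[t] = sum_k w[k] * x[t - k * dilation]`, so an output never looks at future inputs, and the receptive field of a stack is `1 + (kernel_size - 1) * sum(dilations)`.

```python
def dilated_causal_conv1d(x, weights, dilation=1):
    """One causal layer: y[t] = sum_k weights[k] * x[t - k * dilation].

    Inputs before the start of the series are treated as zeros
    (left padding), so y[t] depends only on x[0..t].
    """
    y = []
    for t in range(len(x)):
        acc = 0.0
        for k, w in enumerate(weights):
            idx = t - k * dilation
            if idx >= 0:  # skip the zero-padded region on the left
                acc += w * x[idx]
        y.append(acc)
    return y


def stack_layers(x, weights, dilations):
    """Apply one causal layer per dilation rate, reusing the same weights."""
    for d in dilations:
        x = dilated_causal_conv1d(x, weights, d)
    return x


def receptive_field(kernel_size, dilations):
    """Number of past time steps (including the current one) seen by the stack."""
    return 1 + (kernel_size - 1) * sum(dilations)


# Hypothetical setup: 4 layers, kernel size 2, all-ones weights.
weights = [1.0, 1.0]
plain_rates = [1, 1, 1, 1]    # ordinary causal convolutions
dilated_rates = [1, 2, 4, 8]  # dilation doubles with every layer

# Feed a single impulse at t = 0. The number of non-zero outputs shows
# how long that one input keeps influencing the output of the stack.
impulse = [1.0] + [0.0] * 19
plain_out = stack_layers(impulse, weights, plain_rates)
dilated_out = stack_layers(impulse, weights, dilated_rates)

print(receptive_field(2, plain_rates))       # 5
print(receptive_field(2, dilated_rates))     # 16
print(sum(v != 0.0 for v in plain_out))      # 5
print(sum(v != 0.0 for v in dilated_out))    # 16

# Causality check: changing x[10] must leave outputs before t = 10 untouched.
series = [float(i % 3) for i in range(20)]
changed = list(series)
changed[10] += 100.0
before = stack_layers(series, weights, dilated_rates)
after = stack_layers(changed, weights, dilated_rates)
print(before[:10] == after[:10])             # True
```

With the same four layers and the same number of weights, the dilated stack sees 16 time steps instead of 5. Without dilation the receptive field grows linearly with depth; with doubling dilation rates it grows exponentially.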

Example Results

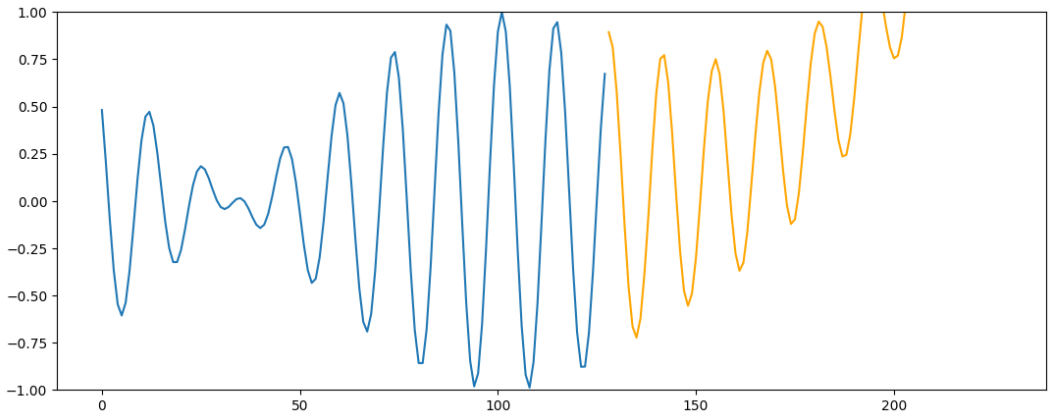

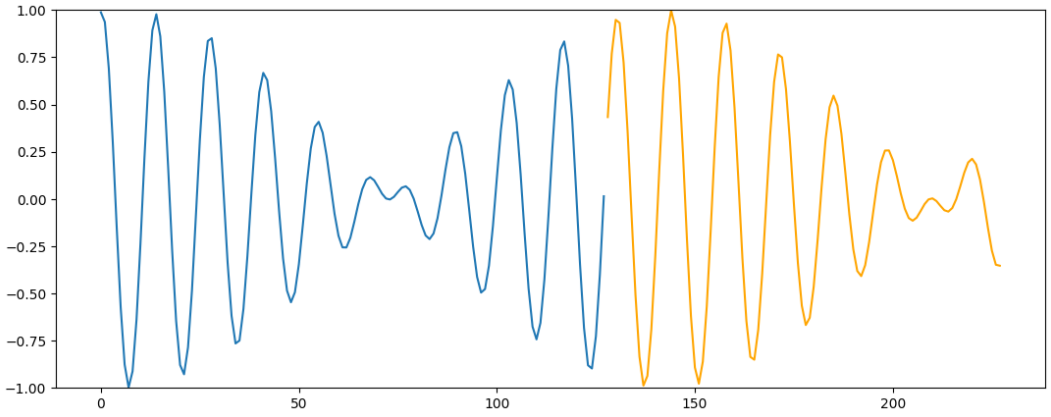

Example results of time series forecasting with and without the DCC are shown above:

(Left) without the DCC, (Right) with the DCC

Reference: github.com/tensorchiefs/dl_book/blob/master/chapter_02/nb_ch02_04.ipynb
